In this project we will try to scrape data from reddit using their API. The objective is to load reddit data into a pandas dataframe. In order to achieve this, first we'll import the following libraries.

The documentation for this API can be found here.

import pandas as pd

import urllib.request as ur

import json

import time

We can access the raw json data of any subreddit by adding '.json' to the URL. Using the urllib.request library, we can extract that data and read it in python.

From the documentation, we need to fill out the header using the suggested format.

Example: User-Agent: android:com.example.myredditapp:v1.2.3 (by /u/kemitche)

#Header to be submitted to reddit.

hdr = {'User-Agent': 'codingdisciple:playingwithredditAPI:v1.0 (by /u/ivis_reine)'}

#Link to the subreddit of interest.

url = "https://www.reddit.com/r/datascience/.json?sort=top&t;=all"

#Makes a request object and receive a response.

req = ur.Request(url, headers=hdr)

response = ur.urlopen(req).read()

#Loads the json data into python.

json_data = json.loads(response.decode('utf-8'))

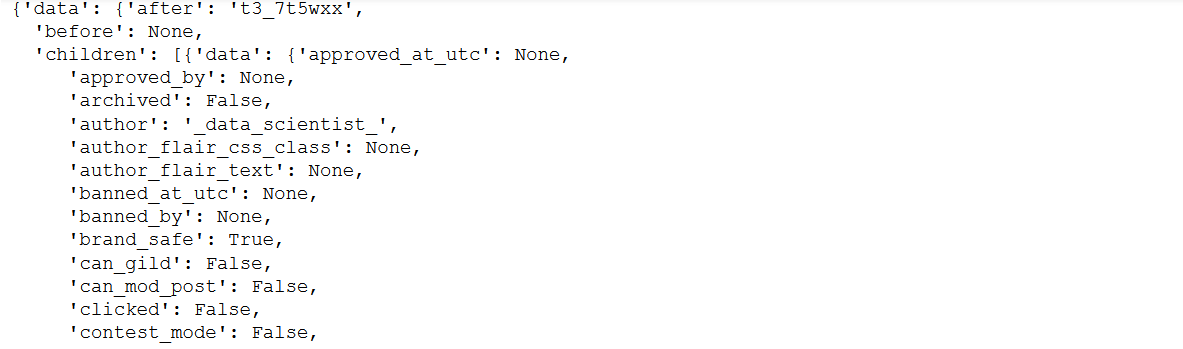

I took a snapshot of the data structure below. It looks like the data is just a bunch of lists and dictionaries. We want reach the part of the dictionary until we see a list. Each item on this list will be a post made on this subreddit.

#The actual data starts.

data = json_data['data']['children']

Each request can only get us 100 posts, we can write a for loop to send 10 requests at 2 second intervals and add the data to the list of posts.

for i in range(10):

#reddit only accepts one request every 2 seconds, adds a delay between each loop

time.sleep(2)

last = data[-1]['data']['name']

url = 'https://www.reddit.com/r/datascience/.json?sort=top&t;=all&limit;=100&after;=%s' % last

req = ur.Request(url, headers=hdr)

text_data = ur.urlopen(req).read()

datatemp = json.loads(text_data.decode('utf-8'))

data += datatemp['data']['children']

print(str(len(data))+" posts loaded")

We've assigned all the posts to a list with the variable named 'data'. In order to begin constructing our pandas dataframe, we need a list of column names. Each post consists of a dictionary, we can simply loop through this dictionary and extract the column names.

#Create a list of column name strings to be used to create our pandas dataframe

data_names = [value for value in data[0]['data']]

print(data_names)

In order to build a dataframe using the pd.DataFrame() function, we will need a list of dictionaries.

We can loop through each element in 'data', using each column name as a key to the dictionary, then accessing the corresponding value with that key. If we come across a post that has

#Create a list of dictionaries to be loaded into a pandas dataframe

df_loadinglist = []

for i in range(0, len(data)):

dictionary = {}

for names in data_names:

try:

dictionary[str(names)] = data[i]['data'][str(names)]

except:

dictionary[str(names)] = 'None'

df_loadinglist.append(dictionary)

df=pd.DataFrame(df_loadinglist)

df.shape

df.tail()

Now that we have a pandas dataframe, we can do simple analysis on the reddit posts. For example, we can write a function to find the most common words used in the last 925 posts.

#Counts each word and return a pandas series

def word_count(df, column):

dic={}

for idx, row in df.iterrows():

split = row[column].lower().split(" ")

for word in split:

if word in dic:

dic[word] += 1

else:

dic[word] = 1

dictionary = pd.Series(dic)

dictionary = dictionary.sort_values(ascending=False)

return dictionary

top_counts = word_count(df, "selftext")

top_counts[0:5]

The results are not too surprising, common english words showed up the most. That is it for now! We've achieved our goal of turning json data into a pandas dataframe.

Learning Summary¶

Concepts explored: lists, dictionaries, API, data structures, JSON

The files for this project can be found in my GitHub repository

Comments

comments powered by Disqus